AI in advertising: Building a Culture of Trust

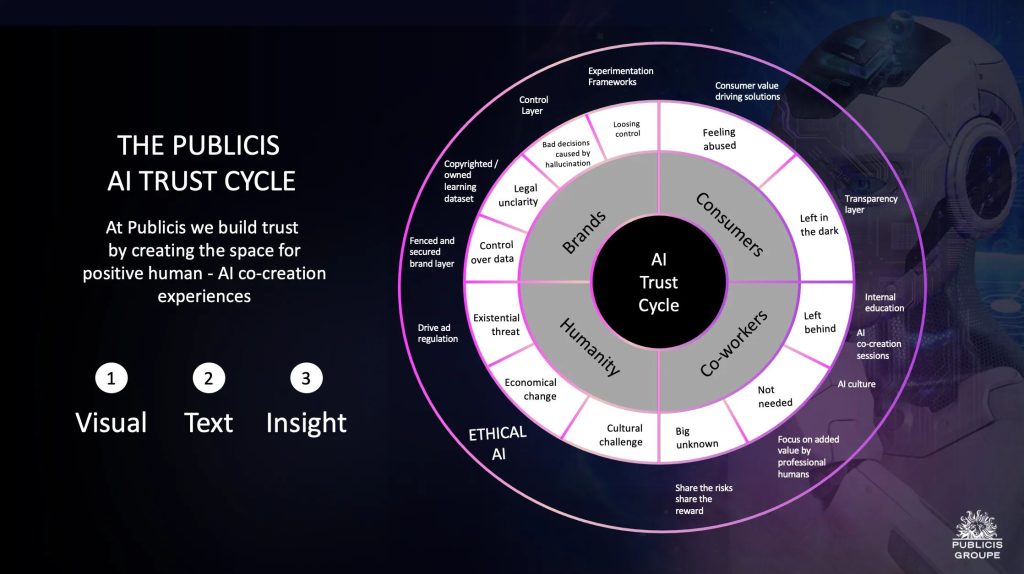

Interested in how AI’s shaking up the ad world? We’re diving into the trust issues and sketching out solutions that could work for everyone — brands, shoppers, employees, even the whole of humanity. And hey, don’t forget to scroll all the way down — there’s a presentation waiting for you, giving you a closer look at our cool Publicis AI Trust Cycle.

The AI Trust Crisis

Look around. AI is everywhere, and nowhere is this more apparent than in advertising. Yet for all its promise, AI in advertising remains shrouded in mystery and uncertainty. So, why the trust issue?

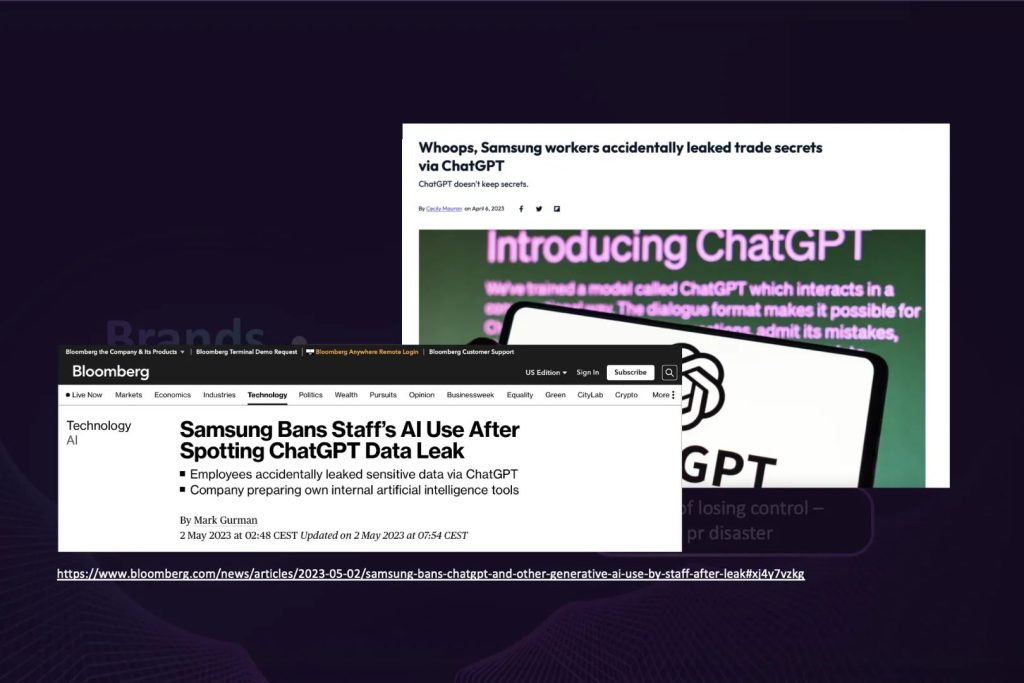

Let’s break it down, starting with the brands. Imagine you’re a brand manager, excited about AI and ready to dive in. But wait! There’s a risk: using AI might expose your brand’s sensitive data. A single accidental leak could be a catastrophe. Take Samsung’s embarrassing incident, in which staff inadvertently leaked sensitive code via an AI tool.

Consumers are another piece of the puzzle. These are the people at the receiving end of AI-driven advertising, yet they often have little understanding of what’s going on behind the scenes. This lack of transparency and understanding can lead to fear and mistrust.

Employees, particularly those in creative roles, are also struggling. On one hand, AI promises to simplify their work and drive innovation. On the other hand, it threatens to outperform them or even render their roles obsolete. It’s a tricky tightrope to walk.

And finally, there’s the broader concern affecting all of humanity. The fear of AI misuse, or even AI itself, can be intense. AI bots can be weaponized to manipulate public opinion, causing social instability. It’s like opening Pandora’s Box: the benefits are enticing, but the potential for catastrophe is hard to ignore.

Would you fly that plane?

Creating the AI Trust Cycle

So, how do we build trust in AI for advertising? We approach it holistically, considering each aspect of the trust issue. Let’s walk through each persona and propose potential solutions to inspire change.

Brands: How might we create a safe space for brands to experiment with AI on real-life use cases, resulting in positive ROI in a short time?

Start with secured, fenced-off databases to protect brand knowledge and intellectual property. For instance, under OpenAI’s API data usage policy, data sent through the API is not used to train its models by default. Another option is a vector database such as Pinecone, which provides optimized storage and querying for brand information in a machine-friendly form.
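To make the vector database idea a little more concrete, here is a minimal sketch of what "machine-friendly brand information" can look like. It is not Pinecone’s API: `TinyVectorStore` is a hypothetical in-memory stand-in, and the three-number vectors are hand-written placeholders for the embeddings a real model would produce. The idea is simply that brand knowledge is stored as vectors, and a query returns the entries closest in meaning.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory store; a hosted vector database plays this role in practice."""

    def __init__(self):
        self._items = {}  # item_id -> (vector, text)

    def upsert(self, item_id, vector, text):
        """Insert or overwrite one piece of brand knowledge."""
        self._items[item_id] = (vector, text)

    def query(self, vector, top_k=1):
        """Return the top_k (score, item_id, text) tuples, most similar first."""
        scored = [
            (cosine_similarity(vector, stored), item_id, text)
            for item_id, (stored, text) in self._items.items()
        ]
        scored.sort(key=lambda row: row[0], reverse=True)
        return scored[:top_k]


# Toy vectors, written by hand for illustration only.
store = TinyVectorStore()
store.upsert("tone", [0.9, 0.1, 0.0], "Brand voice: warm, playful, never sarcastic.")
store.upsert("legal", [0.0, 0.2, 0.9], "Claims must be approved by legal before launch.")
store.upsert("palette", [0.1, 0.9, 0.1], "Primary colour: deep green; accent: gold.")

# A query vector standing in for a question such as "how should this ad sound?"
results = store.query([0.8, 0.2, 0.1], top_k=1)
for score, item_id, text in results:
    print(f"{item_id}: {text} (similarity {score:.2f})")
```

Because the store lives entirely inside the brand’s own environment, only the retrieved snippet ever needs to be passed to a model, which is the "fenced" part of the idea.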

To address legal concerns, brands could consider solutions whose training data is properly licensed. A case in point is the collaboration between bria.ai and Getty Images, which gives brands access to a licensed dataset of images. Even better, Publicis has struck a deal with OpenAI, so every DALL-E-generated image can be used by brands with no copyright worries.

Consumers: How might we communicate transparently and create AI solutions that drive real value for consumers?

The first step is building a transparency layer through AI legislation, which clarifies how AI is used and how consumers’ data is protected. Another step could be to clearly explain the AI’s reasoning behind recommendations to help consumers understand and trust the process.
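As a rough illustration of what "explaining the reasoning" could mean in practice, the sketch below scores a recommendation with a simple weighted sum and reports which signals contributed most. Everything here is an assumption made for the example: the function `explain_recommendation`, the signal names, and the weights are hypothetical, and real recommendation systems are usually far more complex.

```python
def explain_recommendation(weights, signals, top_n=2):
    """Score a recommendation and list the signals that influenced it most.

    weights: importance of each signal (hypothetical values).
    signals: what we observed for this consumer (1.0 = yes, 0.0 = no).
    """
    # Each signal's contribution is its weight times its observed value.
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in signals.items()
    }
    score = sum(contributions.values())
    # Keep the signals with the largest absolute contribution.
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    reasons = [f"{name} ({value:+.2f})" for name, value in top]
    return score, reasons


# Hypothetical weights and consumer signals, for illustration only.
weights = {
    "viewed_running_shoes": 0.6,
    "bought_sports_socks": 0.3,
    "opened_newsletter": 0.1,
}
signals = {
    "viewed_running_shoes": 1.0,
    "bought_sports_socks": 1.0,
    "opened_newsletter": 0.0,
}

score, reasons = explain_recommendation(weights, signals)
print(f"Recommendation score: {score:.2f}")
print("Shown to you because of: " + ", ".join(reasons))
```

The point is less the maths than the output: a consumer-facing "shown to you because of..." line is one small, tangible form of the transparency described above.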

Employees: How might we create opportunities for human-driven and AI-supported co-creation experiences?

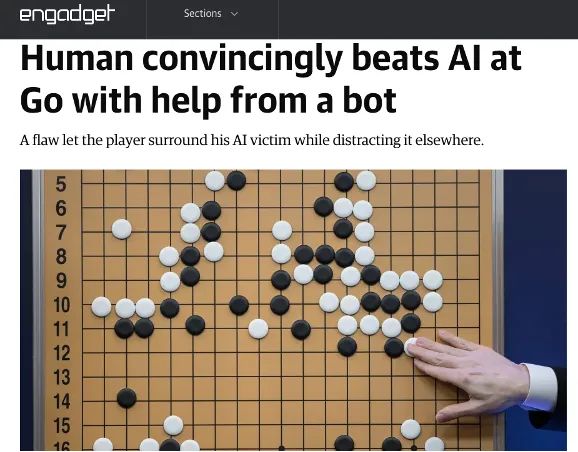

Here’s where education comes in. Providing internal AI education to employees can help dispel fear and mistrust. Co-creation is another key aspect. Remember the Go example, in which a human player, with the help of an AI, defeated a high-ranking AI system? It’s an inspiring reminder of how AI can amplify human creativity rather than replace it.

Humanity: How might we ensure we respect consumer privacy and manage their data to create more value for them?

To respect consumer privacy and use their data ethically, it’s important to drive AI regulations within advertising. Implementing and adhering to a strong ethical framework for AI usage is also critical.

The Publicis AI Trust Cycle

At Publicis, we’re committed to building trust by creating space for positive AI experiences. Our AI Trust Cycle is based on four pillars: brands, consumers, employees, and humanity.

1. Brand: Protect brand knowledge and intellectual property.

2. Consumer: Empower consumers by explaining how their data is used.

3. Employee: Educate and equip employees to leverage AI in their work.

4. Humanity: Advocate for ethical AI usage.

We believe that by focusing on these pillars, we can usher in a new era of trust in AI for advertising. It’s time to move beyond the fear and embrace the immense potential AI offers.

A holistic approach to building trust in AI

In the end, AI in advertising is not just about technology. It’s about people — our brands, our consumers, our employees, and all of humanity. With a foundation of trust, we can turn AI from a source of fear into a powerful tool for growth, innovation, and positive change.

“Unlock Your Business Potential with Our AI Workshop!”

We’re offering a unique opportunity: a FREE AI-powered workshop to tackle your business challenges. But we need your input to make it as relevant as possible.

Complete this quick survey and help us tailor the workshop to YOUR needs.

Act fast: we’re selecting just two partners. Let’s innovate together!